Introduction

Markdown has become the mainstream markup language for technical documentation, open-source projects, blogs, and more. However, most translation tools struggle to preserve Markdown's original structure, especially around code blocks, LaTeX formulas, and structured metadata, which often leads to broken formatting or lost semantics.

md-translator is designed to solve this problem by delivering high-quality translations while accurately preserving Markdown formatting. Its "Plain Text Translation Mode" also lets it handle virtually any type of text document, converting the language while leaving the document's structure in place. It currently supports over 70 languages and can produce translations into several target languages in a single run.

Key Features

Native Support for Markdown Structures

md-translator is deeply adapted for Markdown and can recognize and restore the following common syntax elements:

- FrontMatter metadata (`---`)
- Headings (`#`)
- Blockquotes (`> quote`)
- Links (`[text](URL)`)
- Unordered lists (`-`/`*`/`+`)
- Ordered lists (`1. 2. 3.`)
- Emphasis (`**bold**`, `_italic_`)
- Code blocks (```` ``` ````)
- Inline code (`` `code` ``)
- Inline LaTeX formulas (`$formula$`)
- Block-level LaTeX formulas (`$$formula$$`)

Translation of FrontMatter, code blocks, and LaTeX formulas is optional and can be flexibly configured based on user needs.
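One common way to keep code and formulas out of a translator's reach is to swap them for placeholders before translation and restore them afterwards. The following is a minimal sketch of that idea, not md-translator's actual implementation: the names `mask`, `unmask`, and `translate_preserving`, the `@@n@@` placeholder format, and the `translate` callback are all assumptions made for illustration.

```python
import re

# Spans that should pass through translation untouched (assumed patterns).
# Longer delimiters come first so fenced blocks win over inline code.
PROTECTED = re.compile(
    r"```.*?```"        # fenced code block
    r"|\$\$.*?\$\$"     # block-level LaTeX
    r"|`[^`\n]+`"       # inline code
    r"|\$[^$\n]+\$",    # inline LaTeX
    re.DOTALL,
)


def mask(text):
    """Replace protected spans with numbered placeholders."""
    spans = []

    def stash(match):
        spans.append(match.group(0))
        return f"@@{len(spans) - 1}@@"

    return PROTECTED.sub(stash, text), spans


def unmask(text, spans):
    """Put the original spans back in place of their placeholders."""
    return re.sub(r"@@(\d+)@@", lambda m: spans[int(m.group(1))], text)


def translate_preserving(text, translate):
    """Translate text while leaving code and formulas byte-for-byte intact.

    `translate` is any callable str -> str (hypothetical translation backend).
    """
    masked, spans = mask(text)
    return unmask(translate(masked), spans)
```

Making translation of these elements optional then amounts to leaving the corresponding alternative out of the pattern. The sketch assumes the translation backend returns the placeholders unchanged, which a real tool would need to verify.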

Context-Aware Translation

Markdown documents can also be translated in Context-Aware Translation mode (AI models only). This mode splits the document into segments and sends each one to the large language model together with the text immediately before and after it, which significantly improves coherence between paragraphs and consistency of terminology.
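The segment-plus-context idea can be sketched as follows. This is an illustration under stated assumptions rather than the tool's real logic: the function names, the `max_chars` and `window` parameters, and the prompt wording are hypothetical, and splitting on blank lines is a simplification that would also cut through a code block containing blank lines.

```python
def split_segments(markdown, max_chars=800):
    """Group blank-line-separated blocks into segments of at most max_chars.

    A single block longer than max_chars becomes its own segment.
    """
    segments, current = [], ""
    for block in markdown.split("\n\n"):
        candidate = f"{current}\n\n{block}" if current else block
        if current and len(candidate) > max_chars:
            segments.append(current)
            current = block
        else:
            current = candidate
    if current:
        segments.append(current)
    return segments


def with_context(segments, window=1):
    """Yield (before, segment, after) for each segment."""
    for i, segment in enumerate(segments):
        before = "\n\n".join(segments[max(0, i - window):i])
        after = "\n\n".join(segments[i + 1:i + 1 + window])
        yield before, segment, after


def build_prompt(before, segment, after, target_lang):
    """Assemble a prompt that marks which part is to be translated."""
    return (
        f"Translate only the TEXT section into {target_lang}. "
        "Use the context for terminology and coherence; do not translate it.\n\n"
        f"[CONTEXT BEFORE]\n{before}\n\n"
        f"[TEXT]\n{segment}\n\n"
        f"[CONTEXT AFTER]\n{after}"
    )
```

Each prompt carries more text than the segment alone, so context mode trades extra tokens per request for better consistency.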

Due to the complexity of Markdown structures, enabling context mode may increase the risk of formatting errors in the model output (e.g., unclosed code blocks or misaligned list indentation). When using this mode, check the formatting of the translated output carefully.
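Part of that check can be automated by comparing simple structural counts between the source and the translation. The sketch below is a hypothetical helper, not a feature of md-translator; it only looks at code fences and headings and does not examine list indentation.

```python
import re

# A fence or heading may be indented by up to three spaces.
FENCE = re.compile(r"^ {0,3}(?:```|~~~)", re.MULTILINE)
HEADING = re.compile(r"^ {0,3}#{1,6}[ \t]", re.MULTILINE)


def format_issues(source, translated):
    """Return a list of detected problems; an empty list means none found."""
    issues = []
    src_fences = len(FENCE.findall(source))
    out_fences = len(FENCE.findall(translated))
    if out_fences % 2:
        issues.append("unclosed code fence")
    if out_fences != src_fences:
        issues.append(f"code fence count changed: {src_fences} -> {out_fences}")
    src_heads = len(HEADING.findall(source))
    out_heads = len(HEADING.findall(translated))
    if out_heads != src_heads:
        issues.append(f"heading count changed: {src_heads} -> {out_heads}")
    return issues
```

An empty result does not prove the output is well formed; it only rules out the specific problems the function looks for.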

“Plain Text Translation Mode” for Any Document

Beyond structured Markdown support, md-translator offers a “Plain Text Translation Mode” that bypasses format parsing and directly translates raw content. Whether it's Markdown, TXT, HTML, log files, or even messy technical notes, this mode ensures accurate and efficient translation.

You can also enhance consistency in terminology, context coherence, and translation style by customizing AI prompts—tailoring the output to your actual writing needs.

RTL Language Support

md-translator automatically detects right-to-left (RTL) languages such as Arabic, Hebrew, Urdu, and Persian and adjusts the text direction accordingly.
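How the tool performs this detection is not described here. As a rough sketch, direction can be derived either from the target language code or from the first strongly directional character of the text, using the Unicode bidirectional classes exposed by Python's standard `unicodedata` module. The function names and the language set below are assumptions for illustration.

```python
import unicodedata

# ISO 639-1 codes for the RTL languages named above (illustrative, not exhaustive).
RTL_LANGS = {"ar", "he", "ur", "fa"}


def is_rtl_language(code):
    """True for codes such as 'ar' or 'ar-EG'."""
    return code.split("-")[0].lower() in RTL_LANGS


def is_rtl_text(text):
    """Guess direction from the first strongly directional character."""
    for ch in text:
        bidi = unicodedata.bidirectional(ch)
        if bidi in ("R", "AL"):   # Hebrew-type or Arabic-type letter
            return True
        if bidi == "L":           # left-to-right letter
            return False
    return False                  # digits, spaces, or punctuation only


def text_direction(lang_code, text=""):
    """Return 'rtl' or 'ltr', suitable for an HTML dir attribute."""
    return "rtl" if is_rtl_language(lang_code) or is_rtl_text(text) else "ltr"
```

Checking the first strong character mirrors the default paragraph-direction rule of the Unicode Bidirectional Algorithm, so leading digits or punctuation do not affect the result.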

Additional Feature: Extract Clean Plain Text

md-translator can also convert Markdown content into clean plain text, which simplifies post-processing and semantic analysis:

- Automatically removes all Markdown syntax

- Hides technical content like code blocks and links

- Outputs plain text ideal for summarization, search indexing, or NLP tasks

This feature is especially useful for automated applications like content summarization, semantic analysis, or knowledge graph construction.
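For a sense of what such an extraction involves, here is a minimal regex-based sketch. It is an assumption-laden illustration, not the tool's implementation: `markdown_to_plain` is a hypothetical name, link labels are kept while URLs are dropped, and the emphasis rule is deliberately simple, so a stray `*` in running text may be stripped as well.

```python
import re


def markdown_to_plain(markdown):
    """Strip common Markdown syntax and drop code, leaving readable text."""
    text = re.sub(r"\A---\n.*?\n---\n", "", markdown, flags=re.DOTALL)  # front matter
    text = re.sub(r"```.*?```", "", text, flags=re.DOTALL)              # fenced code
    text = re.sub(r"`[^`\n]+`", "", text)                               # inline code
    text = re.sub(r"!\[[^\]]*\]\([^)]*\)", "", text)                    # images
    text = re.sub(r"\[([^\]]+)\]\([^)]*\)", r"\1", text)                # links: keep label
    text = re.sub(r"^ {0,3}#{1,6}[ \t]+", "", text, flags=re.MULTILINE)  # headings
    text = re.sub(r"^ {0,3}>[ \t]?", "", text, flags=re.MULTILINE)       # blockquotes
    text = re.sub(r"^[ \t]*(?:[-*+]|\d+\.)[ \t]+", "", text,
                  flags=re.MULTILINE)                                    # list markers
    text = re.sub(r"(?<!\w)(\*\*|__|\*|_)(.+?)\1(?!\w)", r"\2", text)    # emphasis
    text = re.sub(r"\n{3,}", "\n\n", text)                               # extra blank lines
    return text.strip()
```

A parser-based approach would handle nesting and edge cases more reliably; regular expressions are used here only to keep the example short.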

Use Cases

- Bulk translation of multilingual technical documents

- Internationalization of open-source project documentation

- Bilingual synchronization of Markdown blog content

- Format-preserving translation of mixed content (code, formulas, etc.)

- Semantic translation and extraction for any structured/unstructured text

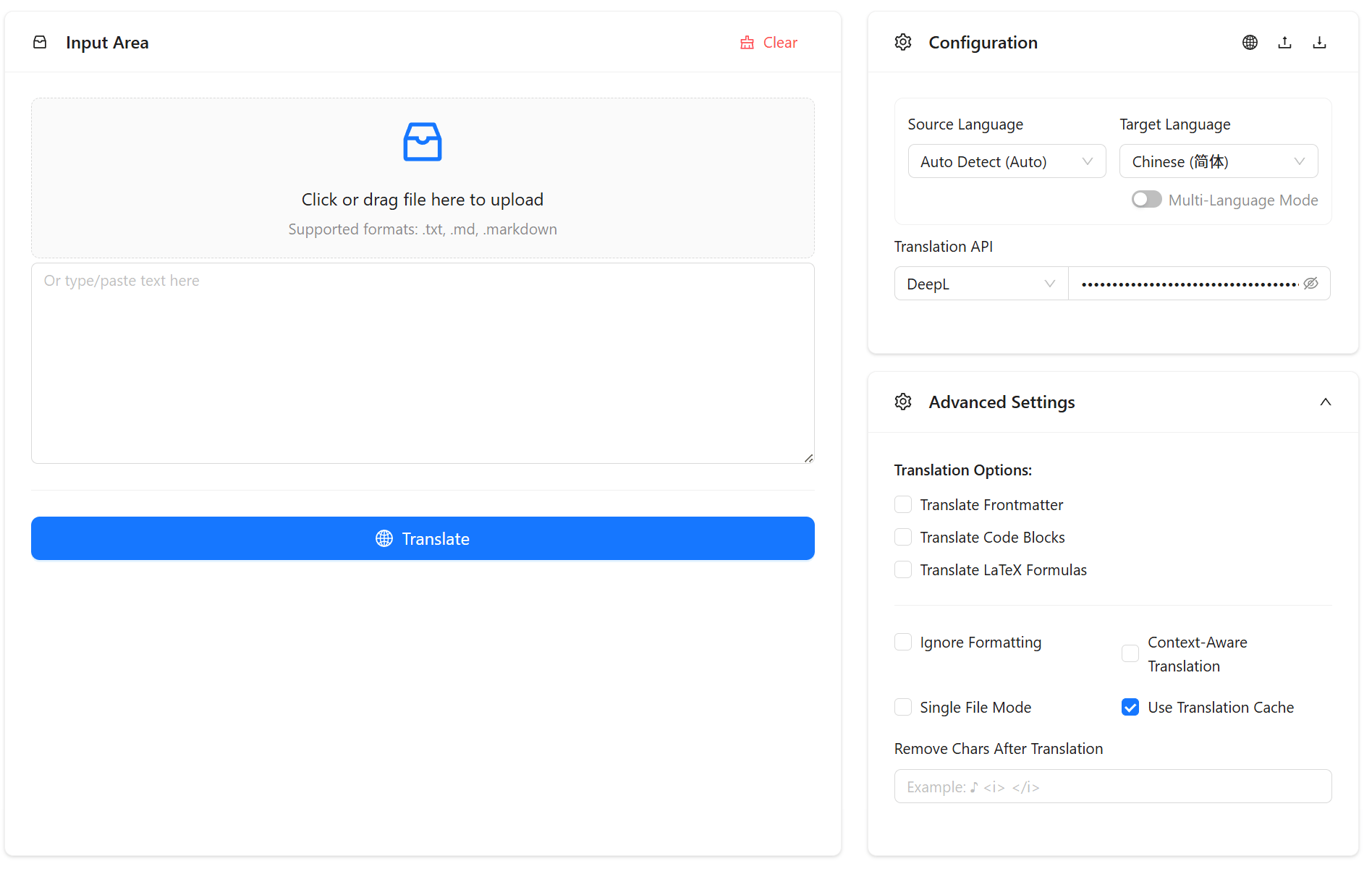

Configuration Instructions

The application offers a wide range of translation configuration options. For detailed information, please refer to:

- Interface Overview: Features such as translation caching and multilingual translation, as well as parameters like chunk size and delay time.

- Translation API: Learn about the supported translation interfaces and AI large models.